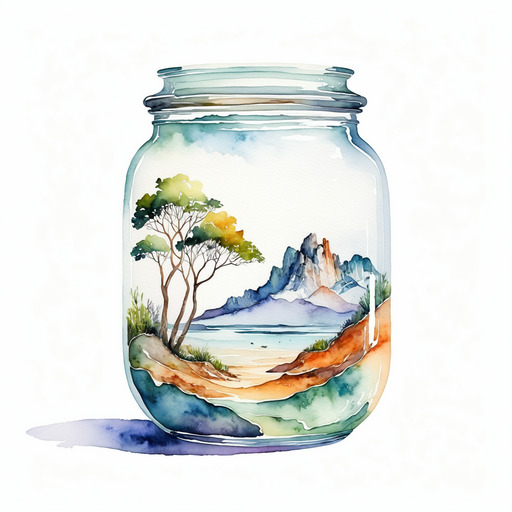

Molmo’s Favourite Image

Summary

We show a set of 64 images to the Molmo 7B-D model and ask it to rank them. It turns out that it’s favourite image of the bunch is a painting of the view onto a Mediterranean-looking bay from an open room.

Molmo 7B-D’s favourite image (of those tested)

Introduction

A lot of work (and compute) has gone into training AI systems which generate images that humans find pleasing. Although a lot of current image generation models seem to produce something with an uncanny AI sheen, compared to the outputs from just a few years ago, it seems these efforts have been successful. We also now have some pretty good Vision Language Models (VLMs) which can help us with tasks like extracting data from images, answering questions about images, and converting images to text representations.

However, nobody1 seems to be asking what the AI likes. Well, in a way those using reward models in RLHF are asking that, but they’re also simultaneously trying to make the reward models like what humans like. What if instead we ask a model which hasn’t been trained to mimic human preferences what it likes?

So that’s what we’ll do in this project: we’ll ask Molmo 7B-D — an open source VLM — to rank some images.

You can find the code for this project on GitHub.

Skip to the interactive section if you just want to see the images and rankings.

Set-up

We need two things for this project: a set of images to rank, and a model to ask for its preferences about the images.

Images

I decided to use some recently-generated AI images for this project. There are a few reasons for this:

- I don’t want any of the images to have appeared in the model’s training data. My initial idea was to use famous old artworks, but its likely these will be judged on the context of the image in the training data rather than giving us a sense of the model’s aesthetic sensibilities.

- It was easy to ensure that all the images were the same size. Cropping arbitrarily sized images is an option, but for some images cropping removes too many important details.

- Since the images I’m using are AI-generated, there shouldn’t be any copyright issues.

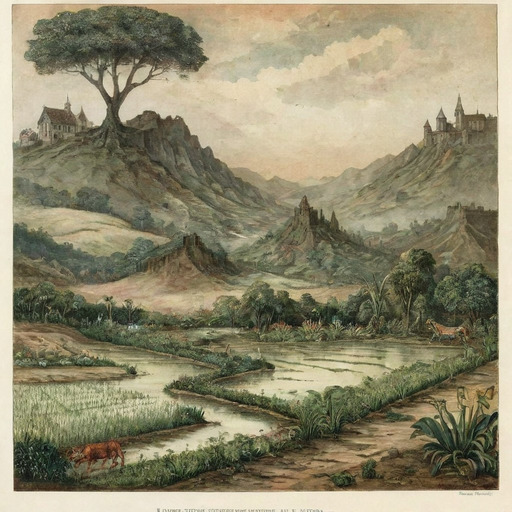

Getting the images was quite easy: I just searched on civitai. I applied a filter to search primarily for ‘watercolour landscapes’ in a square aspect ratio, and excluded humans from the search (civitai is a good resource but I’d rather not be comparing loads of photos of anime girls, so fortunately civitai makes it easy to avoid via their tagging system).

I decided to limit the image set to just 64 images, mostly because it’s just a bit of fun and I don’t want to spend a

lot of money making comparisons. Another limitation is that I was manually downloading the images from civitai, because

you tend to get lots of variations on the same prompt popping up in the results, and I wanted to ensure a diverse(-ish)

set of images were compared. I did a little bit of preprocessing to get all images to be 512px×512px .png files.

Models

There are quite a few VLM models available these days. Of course the big proprietary models like GPT-4o and Claude 3.5 Sonnet can process image input. However I opted for an open model called Molmo 7B-D. Some factors in my choice:

- Logits: it’s useful to have access to the token logits so we can infer the probability of different choices without repeatedly sampling the model. These are easy to get from open source models.

- Price: querying the big models can become somewhat expensive. For small open source models, you can often run them on your own hardware2

- Personal Development: I enjoy playing with the models at a slightly lower level than through APIs. Plus, it’s a nice way to keep my engineering skills sharp.

- Openness: I quite like how open Molmo is3, and wanted to try it. I have access to Llama-3.2, but as of writing it’s not available in the EU! Though I used the Molmo-7B-D model which does not have open data for the LLM backbone, it’s still probably technically more open than the other top models.

- Performance: Molmo-7B-D’s benchmark results are pretty good for the model’s weight class.

Analysis

We use a simple procedure: for each pair of images (A, B) in our image set, show the images side by side by side, and give the

VLM a prompt asking it to choose between the left image and the right image. The ‘default’ prompt I used is

PROMPT = """This image contains two pictures: one in the left half (L), and one on the right half (R). You must indicate which picture you prefer by simply stating "L" or "R" followed by a newline.

The image I prefer is:"""

To compute the preference, we compare the logit for the " L" token against that for the " R" token (ignoring all

other tokens4). One thing to note is that the model seems to have a consistent bias towards the image in the left

hand position. So we actually feed the model two images for each pair: image [A B] and image [B A], and we debias by

taking the difference between the logits5. We convert logits to probabilities using a temperature of 1.

An example image input for comparing images. We also compare the same image pair with the image positions swapped, in order to remove positional bias.

There are 64 images in the set, so we need to make 1953 pairwise comparisons. The code for doing so can be found on GitHub.

Once we have the comparison data, we can do some analyses. I’m still a big fan of Julia, but am limited in my day-to-day work to using python — so here I’ve decided to switch from Python to Julia. I’ve provided a Pluto notebook, also on GitHub.

Ranking

To rank the images, we compute an MLE fit of the Bradley-Terry model using Zermelo’s iterative algorithm (described here on Wikipedia). When you hover over the images in the interactive section, the log score is displayed in yellow.

Consistency Across Prompts

I tried a few different prompts, which you can see in the interactive section. These are:

- the L/R prompt, where the model has to respond ‘L’ for left and ‘R’ for right;

- the A/B prompt, where the model has to respond ‘A’ for left and ‘B’ for right; and

- the L/R art judge prompt, where we add a tiny bit of role context to the L/R prompts.

In addition to these three prompts, I also tried using

float16representations instead ofbfloat16representations, to see whether it makes much difference.

These are only minor variations, so one might expect that they give similar results. The Spearman’s rank correlation matrix below shows the ranks are highly correlated; and you can see this with your own eyes in the interactive section. However, since it has been noted that small changes in prompts (including changing option labels) can cause large changes in performance, it’s reassuring to see that small tweaks to the prompt don’t completely change the preferences.

| L/R | L/R (f16) | L/R (art) | A/B | |

|---|---|---|---|---|

| L/R | 1 | |||

| L/R (f16) | 0.9992 | 1 | ||

| L/R (art) | 0.9927 | 0.9929 | 1 | |

| A/B | 0.9962 | 0.9961 | 0.9899 | 1 |

Preference Transitivity

An obvious question to ask is whether Molmo has rational preferences. So, we’ll check whether the preferences are transitive. This means that if Molmo prefers image A to B, and prefers image B to C, then it prefers image A to C. If this is not the case, then we can set up a Dutch book in which we can extract infinite money6 from Molmo by posing as an art dealer, and offering to upgrade from image A to image B (for a price), offering to upgrade from image B to image C (for a price), and then offering to upgrade from image C back to image A (for a further price).

For any pair of images \((A,B)\) we can build a preference graph by drawing an edge \(A \rightarrow B \) if Molmo prefers \(A\) to \(B\) (i.e. logits for \(A\) are greater than the logits for \(B\) : \((w_A - w_B)>0\)), and drawing an edge \(B \rightarrow A \) if Molmo prefers \(B\) to \(A\). We then check for any cycles in the graph.

It turns out there are a lot of cycles — but it’s mostly caused by very weak preferences. For example one of the most strongly intransitive preference cycles is \(I_{6} \succ I_{57} \succ I_{12} \succ I_{6}\), but Molmo only prefers \(I_{57}\) to \(I_{12}\) 51.6% of the time. In fact, if we ignore all preferences where the preference is weaker than 52.1%–47.9% (which means ignoring about 20% of the preferences) then the cycles disappear!

An example of Molmo’s intransitive preferences. Arrows point from more to less preferred.

Interactive Gallery

Using the widget below, you can see all the images and reorder them by different rankings. Molmo consistently picks the Mediterranean Window image (Image 40) as its favourite. I think that’s a pretty good choice. Personally I also like Image 9, which Molmo ranks as upper-average; and Image 39, which it ranks consistently near the bottom.

(No prompt: sorting images by image index)

Conclusion

This has been a fun Sunday project, but I have a PhD thesis to finish writing, so there’s a lot more I could do that is on hold for now. Possible future work includes:

- Comparing more images: we don’t need to make every pairwise comparison to rank a bigger set (just as not every chess player needs to play each other for us to compute Elo). Even if we did want to stick with evaluating all pairings, my inference code could easily be made more efficient by increasing the batch size. The main challenge is actually finding a bigger set of images I care to rank!

- Personal Finetuning: can I finetune a model to share the same aesthetic preferences as myself? Essentially just giving myself a personal reward model.

- Testing Different Models: I could test other models, e.g. Molmo-72B, or Llama-3.2-11B-Vision. It would be interesting to see how correlated the preferences of different models are!

- RLAIF: can we set up a training loop to finetune a diffusion model to optimise Molmo’s preferences? I may just do this (when I get more time) — watch this space!

-

May not be true: I haven’t actually checked. I just thought of the idea and though it would make a fun project. The world is big so I’m sure someone out there actually has done this. ↩︎

-

Though in the end I ended up using a cloud provider and my total bill was probably about the same as if I had made two comparisons per image pair using GPT-4o. Though this assumes I could reliably access the relevant logits and so don’t have to resort to sampling. ↩︎

-

My stance on open source AI in general is mixed — I’m very grateful for current open models, which I think have been a great thing for AI developers and scientists. But as models get more powerful, open source AI developers have a big responsibility to make sure their models are safe, controllable, and robustly refuse to do dangerous things. ↩︎

-

It turns out that sometimes the model gives a non-answer like “Both images have their merits and cannot be compared”, but the prompts I’m using seem to mostly avoid this issue compared to some variant prompts I tried. The instruction to “simply state L or R followed by a newline” does actually seem to help in this regard. ↩︎

-

Let’s assume there’s a constant bias \(b_L\) and \(b_R\), and assume the true preference logits for \(A\) and \(B\) are \(w_A\) and \(w_B\) respectively. We want to find \(w_A-w_B\). When \(A\) is on the left the left logit will be \(w^{AB}_L = b_L + w_A\) and the right logit \( w^{AB}_R = b_R + w_B \). In the flipped image, \(w^{BA}_L = b_L + w_B\) and \(w^{BA}_R = b_R + w_A\). So we can compute \( (w^{AB}_L - w^{BA}_L) - (w^{AB}_R - w^{BA}_R) = (b_L + w_A - b_L - w_B) - (b_R + w_b - b_R - w_A) = 2(w_A - w_B) \). ↩︎

-

Well, that’s the argument, anyway… ↩︎